AI: The Computer That Mistook a Lion for a Library

Before the second decade of the 21st century ends, we are going to face automated transport and surveillance systems in our cities and towns. AI is already partly used in traffic control and surveillance, but it is backed up by human operators. Soon, however, this will change. That’s why the critical task is to teach computers to recognize their environment.

This task has become feasible thanks to the Deep Neural Network, or DNN, a newer, many-layered generation of the Artificial Neural Network (ANN). What is it? In a nutshell, a neural network is a computer program that learns to respond to external inputs, such as images or sounds, from examples rather than by following explicitly programmed commands. Its structure loosely imitates the connections between neurons in the human brain.
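To make “imitates the connections between neurons” a little more concrete, here is a minimal Python sketch of a single artificial neuron (a weighted sum of inputs passed through an activation function) and a tiny two-layer network built from such neurons. The weights are hypothetical numbers picked by hand for illustration; in a real DNN they are learned from examples, and there are millions of them spread across many layers.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical hand-picked weights; a real network learns these during training.
hidden_w = [[0.5, -1.0, 0.25], [-0.75, 0.5, 1.0]]
hidden_b = [0.0, 0.1]
output_w = [[1.5, -2.0]]
output_b = [0.2]


def tiny_network(pixels):
    """Three input 'pixels' -> two hidden neurons -> one output score in (0, 1)."""
    hidden = layer(pixels, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)[0]


print(tiny_network([0.2, 0.4, 0.9]))
```

Stacking many such layers is what makes a network “deep”; nothing in the code is told what a lion is, which is why the behavior has to come from the training examples.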

The math involved is so complicated that even Jeff Clune, an assistant professor of computer science at the University of Wyoming, admits: “We understand that they work, just not how they work.” And it seems that AI has inherited not only the advantages of the human brain but its disadvantages as well.

The main task for the AI here is to recognize images. An automated vehicle, for instance, has to tell a passer-by from a pole or an animal. To teach the AI to recognize, say, a lion, the system is shown many pictures of the predator in various environments, each labeled “This is a lion”. When shown a picture of a cat, the system should answer, “This is NOT a lion”. Modern DNNs can recognize objects with about 99% accuracy on some benchmarks. It is this precision that makes face-recognition identification on smartphones possible!
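The labeled-examples training described above can be sketched as a tiny binary classifier trained with gradient descent. This is logistic regression, not a deep network, and the two features (a hypothetical “mane score” and “body size”) are assumptions standing in for what a DNN would extract from raw pixels on its own. The `accuracy` function shows what a figure like “99%” means: the share of examples labeled correctly.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=200, lr=0.5, seed=0):
    """examples: list of (features, label), where label 1 = "lion" and 0 = "not a lion"."""
    rng = random.Random(seed)
    n = len(examples[0][0])
    w = [0.0] * n
    b = 0.0
    data = list(examples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log-loss with respect to the raw score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    """Answer 1 ("This is a lion") if the predicted probability is at least 0.5."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


def accuracy(w, b, examples):
    """Fraction of examples whose predicted label matches the true label."""
    return sum(predict(w, b, x) == y for x, y in examples) / len(examples)


# Hypothetical toy features: (mane_score, body_size), both in [0, 1].
training_set = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.7], 1),     # lions
    ([0.1, 0.1], 0), ([0.2, 0.05], 0), ([0.15, 0.2], 0),   # house cats
]

w, b = train(training_set)
print(accuracy(w, b, training_set))
```

A real evaluation would measure accuracy on pictures the model never saw during training; the toy data here is far too small for that.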

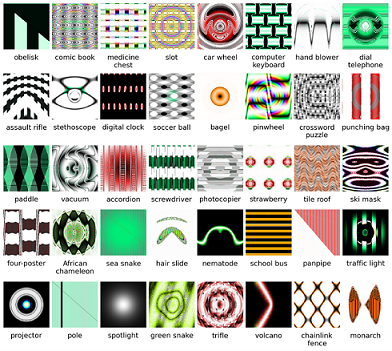

But how exactly does the AI do the trick? It picks up only the most characteristic features of an image, its anchors. The problem is which anchors the system chooses. Three computer science researchers, Anh Nguyen, Jason Yosinski, and Jeff Clune, decided to look into the matter and answer this question. They altered pictures by adding white noise to them, or presented images consisting of nothing but rings and stripes of different shapes and colors.
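Many of the researchers’ images were produced by evolutionary algorithms. The sketch below is a heavily simplified stand-in, assuming a hypothetical linear classifier with random weights in place of a trained DNN: it starts from white noise and keeps a random single-pixel mutation only when the classifier’s confidence goes up (a so-called (1+1) evolution strategy).

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_classifier(n_pixels, seed=1):
    """Hypothetical stand-in for a trained network: fixed random weights, sigmoid output."""
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0) for _ in range(n_pixels)]

    def confidence(image):
        return sigmoid(sum(wi * xi for wi, xi in zip(w, image)))

    return confidence


def evolve_fooling_image(confidence, n_pixels, steps=2000, sigma=0.2, seed=2):
    """(1+1) evolution: mutate one pixel, keep the child only if confidence rises."""
    rng = random.Random(seed)
    parent = [rng.random() for _ in range(n_pixels)]  # start from white noise
    best = confidence(parent)
    for _ in range(steps):
        child = parent[:]
        i = rng.randrange(n_pixels)
        # Gaussian mutation, clipped to the valid pixel range [0, 1].
        child[i] = min(1.0, max(0.0, child[i] + rng.gauss(0.0, sigma)))
        score = confidence(child)
        if score > best:
            parent, best = child, score
    return parent, best


confidence = make_classifier(64)
image, best = evolve_fooling_image(confidence, 64)
print(round(best, 4))
```

The evolved image contains nothing a person would recognize, yet the classifier’s confidence climbs close to 100%, because the search optimizes only the features the classifier happens to rely on.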

To us, these pictures look like abstract art, and we could classify them only if given labels. But to the computer they are as clear as day. For example, a school bus is recognized by its alternating yellow and black colors. The DNN picks up a few of the most prominent features and gives an answer. The wrong answer. So a white and blue school bus won’t be recognized as such, but a bee that is careless enough to fly too close to a camera lens can be mistaken for a school vehicle. Just imagine such an AI aboard a fully automated vehicle!
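The school-bus example boils down to a classifier that leans on a single shortcut cue. The toy sketch below assumes images reduced to one hypothetical scanline of color names and a hand-written stripe detector in place of learned features; it shows how such a cue accepts a bee and rejects a white and blue bus.

```python
def stripe_score(scanline):
    """Fraction of neighboring pixel pairs that switch between yellow and black."""
    pairs = list(zip(scanline, scanline[1:]))
    if not pairs:
        return 0.0
    switches = sum(1 for a, b in pairs if {a, b} == {"yellow", "black"})
    return switches / len(pairs)


def shortcut_classifier(scanline, threshold=0.5):
    """Hypothetical classifier that has learned only one cue for "school bus"."""
    return "school bus" if stripe_score(scanline) >= threshold else "not a school bus"


# Hypothetical scanlines across three photos.
yellow_bus = ["yellow", "black", "yellow", "black", "yellow", "yellow", "black"]
white_blue_bus = ["white", "blue", "white", "white", "blue", "white", "blue"]
bee_close_up = ["yellow", "black", "yellow", "black", "yellow", "black", "yellow"]

for name, scan in [("yellow bus", yellow_bus),
                   ("white and blue bus", white_blue_bus),
                   ("bee", bee_close_up)]:
    print(name, "->", shortcut_classifier(scan))
```

A real DNN learns its cues rather than having them written by hand, but the failure mode is the same: whatever matches the cue wins, whether or not it is a bus.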

As I’ve pointed out before, AI has inherited the human brain’s disadvantages as well. You see, we don’t see with our eyes; we see with our brain. That’s why we can mistake a bush for a sitting dog, as happened to a friend of mine who, by the way, is terribly afraid of dogs. She mistook the vegetation for an animal at dusk but soon realized her mistake. Some people, however, can’t. Back in the 1980s, the famous neurologist Oliver Sacks described a man who mistook his wife for a hat. The poor man suffered from visual agnosia, a specific brain disorder that impairs the recognition of objects. The AI is just like this patient: it can’t see its own mistake and say, “Oh, stupid me, it’s just a bush, not a dog!”

Further studies revealed an even more alarming truth: adding a little specially crafted noise to an otherwise normal-looking picture is enough to confuse the AI. Where we humans see a lion, the AI sees a library and stands its ground. In one series of experiments, a DNN-based AI did exactly that: it mistook a lion for a library.
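One well-known way to compute such noise is the fast gradient sign method (FGSM) of Goodfellow and colleagues; it is not necessarily the method behind the lion/library result. The sketch applies it to a hypothetical linear “lion vs. library” classifier with 1,000 pixels, where the gradient of the score with respect to the image is simply the weight vector. The weights, the image, and the step size `eps` are all assumptions.

```python
import random


def sign(v):
    """Return +1, 0, or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


rng = random.Random(0)
N = 1000                                            # number of "pixels"
w = [rng.choice([-0.1, 0.1]) for _ in range(N)]     # hypothetical trained weights
image = [rng.uniform(0.2, 0.8) for _ in range(N)]   # hypothetical lion photo
b = 2.0 - dot(w, image)                             # bias chosen so the photo scores +2


def classify(x):
    return "lion" if dot(w, x) + b > 0 else "library"


def fgsm_perturb(x, eps):
    """Shift every pixel by eps in the direction that lowers the "lion" score.

    For a linear score w.x + b the gradient with respect to x is w, so the
    step is -eps * sign(w); results are clipped to the valid range [0, 1].
    """
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for xi, wi in zip(x, w)]


adversarial = fgsm_perturb(image, eps=0.05)
print(classify(image), "->", classify(adversarial))
```

Each pixel moves by only 0.05, yet the score drops by `eps` times the sum of the absolute weights, here 0.05 × 100 = 5, which is enough to flip the label. The more pixels an image has, the larger this total shift can be, which is one explanation for why barely visible noise can fool high-dimensional models.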

Deliberately added noise is one thing, and a creepy one, since it opens the door to cybercrime. An ever-changing environment is another. The slightest change in light, shadows, or color temperature caused by the position of the sun can trigger a mistake. And the AI will have to deal not with a series of static images but with continuous video.
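To illustrate why small lighting changes matter once the input is video, the sketch below runs one hypothetical classifier on five consecutive frames that differ only by a slight global brightness shift. The weights, the “pedestrian”/“pole” labels, and the brightness offsets are all assumptions, chosen so that the first frame sits near the decision boundary, as a real borderline case might.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


rng = random.Random(3)
N = 100
w = [0.2] * N                                       # hypothetical weights; all favor bright pixels
frame0 = [rng.uniform(0.2, 0.8) for _ in range(N)]  # hypothetical first video frame
b = 0.5 - dot(w, frame0)                            # frame0 sits just on the "pedestrian" side


def classify(frame):
    return "pedestrian" if dot(w, frame) + b > 0 else "pole"


def relight(frame, offset):
    """Simulate a small global brightness change, clipped to the valid pixel range."""
    return [min(1.0, max(0.0, p + offset)) for p in frame]


# Hypothetical brightness drift across five consecutive frames (e.g. a passing cloud).
offsets = [0.0, 0.02, -0.02, -0.04, 0.01]
labels = [classify(relight(frame0, o)) for o in offsets]
print(labels)
```

Here the score is 0.5 + 20 × offset, so a drop in brightness of just 0.04 turns one frame in five into a “pole”, and a vehicle acting on every frame would act on that one too.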

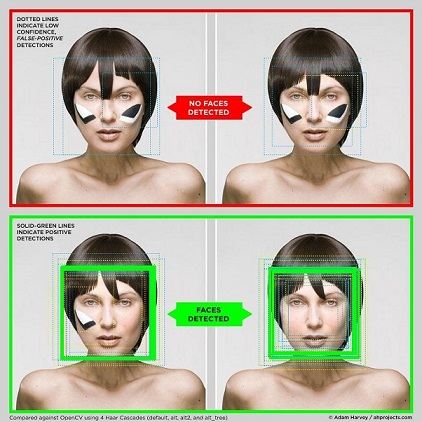

This hole in the software is already being exploited by those who want to fool facial recognition. Modern systems are very sophisticated and can see through dark glasses, wide-brimmed hats, and scarves. But a bit of makeup does the trick.

Source of image: cvdazzle.com

You would hardly fail to recognize a friend, a classmate, or a neighbor even with a weirdly painted face, while the AI will miss the face completely without even knowing it.

But let’s go back to the AI that will soon drive cars and other vehicles. The regulations issued by the Department of Transportation stress the cybersecurity of onboard systems, so that nobody can hack a vehicle’s computer and take control of it. The regulations also draw on military security standards for automated systems, but the problem is that no such standards have been developed for DNNs and image recognition systems. At least, none that I have managed to find.

And that’s not the only problem that will arise once robot cars arrive on our streets. Stay tuned!
