History of Microprocessors [Video]

Video uploaded by professorgstatla on August 24, 2010

The Microprocessor

By 1970, engineers working with semiconductor electronics recognized that the number of components placed on an integrated circuit was doubling about every year. Other metrics of computing performance were also increasing, and at exponential, not linear, rates. C. Gordon Bell of the Digital Equipment Corporation recalled that many engineers working in the field used graph paper on which they plotted time along the bottom axis and the logarithm of performance, processor speed, price, size, or some other variable on the vertical axis. On a logarithmic rather than a linear scale, advances in the technology appeared as a straight line whose slope indicated the time it took to double performance, memory capacity, or processor speed. By plotting the trends in IC technology, an engineer could predict the day when one could fabricate a silicon chip with the same number of active components (about 5,000) as there were vacuum tubes and diodes in the UNIVAC, the first commercial computer marketed in the United States, in 1951. A cartoon that appeared along with Gordon Moore
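The graph-paper method described above can be sketched in a few lines of Python. This is only an illustration: the function names and the sample figures (64 components in 1965, 1,024 in 1969) are made up for the example and are not historical data from the text.

```python
import math

def doubling_time(t0, v0, t1, v1):
    """Doubling time implied by two points on an exponential trend.

    On a log2 scale the trend is a straight line; its slope is the
    number of doublings per unit of time, so the doubling time is 1/slope.
    """
    slope = (math.log2(v1) - math.log2(v0)) / (t1 - t0)
    return 1.0 / slope

def years_to_reach(current, target, doubling_years):
    """Years needed to grow from `current` to `target` at a fixed doubling time."""
    return doubling_years * math.log2(target / current)

# Hypothetical sample points, chosen only to make the arithmetic easy to follow.
d = doubling_time(1965, 64, 1969, 1024)   # four doublings in four years
wait = years_to_reach(64, 5000, d)        # extrapolate to about 5,000 components
print(d, round(wait, 1))
```

With these assumed points the line implies a doubling time of one year, and extending it shows roughly how long until a chip could hold about 5,000 components, the kind of prediction the engineers read off their graph paper.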

But even as Moore recognized, that did not make it obvious how to build a computer on a chip or even whether such a device was practical. That brings us back to the Model T problem that Henry Ford faced with mass-produced automobiles. For Ford, mass production lowered the cost of the Model T, but customers had to accept the model as it was produced (including its color, black), since to demand otherwise would disrupt the mass manufacturing process. Computer engineers called it the “commonality problem”: as chip density increased, the functions a chip performed became more specialized, and the likelihood that a particular logic chip would find common use among a wide variety of customers got smaller and smaller.

A second issue was related to the intrinsic design of a computer-on-a-chip. Since the time of the von Neumann Report of 1945, computer engineers have spent a lot of their time designing the architecture of a computer: the number and structure of its internal storage registers, how it performed arithmetic, and its fundamental instruction set, for example. Minicomputer companies like Digital Equipment Corporation and Data General established themselves because their products’ architecture was superior to that offered by IBM and the mainframe industry. If Intel or another semiconductor company produced a single-chip computer, it would strip away one reason for those companies’ existence. IBM faced this issue as well: it was an IBM scientist who first used the term architecture as a description of the overall design of a computer. The success of its System/360 line came in large part from IBM’s application of microprogramming, an innovation in computer architecture that had previously been confined mainly to one-of-a-kind research computers.

The chip manufacturers had already faced a simpler version of this argument: they produced chips that performed simple logic functions and gave decent performance, though that performance was inferior to custom-designed circuits that optimized each individual part. Custom-circuit designers could produce better circuits, but what was crucial was that the customer did not care. The reason was that the IC offered good enough performance at a dramatically lower price, it was more reliable, it was smaller, and it consumed far less power.

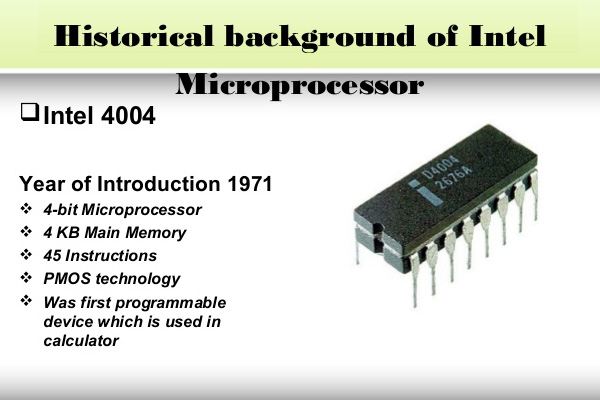

Historical Background of Intel: 4004 Microprocessor. Image source: slideshare.net.

It was in that context that Intel announced its 4004 chip, advertised in a 1971 trade journal as a “microprogrammable computer on a chip.” Intel was not alone; other companies, including Texas Instruments and an electronics division of the aerospace company Rockwell, announced similar products a little later. As with the invention of the integrated circuit, the invention of the microprocessor has been contested. Credit is usually given to Intel and its engineers Marcian “Ted” Hoff, Stan Mazor, and Federico Faggin, with an important contribution from Masatoshi Shima, a representative from a Japanese calculator company that was to be the first customer. What made the 4004 and its immediate successors, the 8008 and 8080, successful was Intel’s ability to address both objections. It answered the commonality problem by also introducing input-output and memory chips, which allowed customers to customize the 4004’s functions to fit a wide variety of applications. Intel met the architects’ objection by offering a system that was inexpensive and small and consumed little power. Intel also devoted resources to assisting customers in adapting it to places where previously a custom-designed circuit was being used.

In recalling his role in the invention, Hoff described how impressed he had been by a small IBM transistorized computer called the 1620, intended for use by scientists. To save money, IBM stripped the computer’s instruction set down to an absurdly low level, yet it worked well and its users liked it. The computer did not even have logic to perform simple addition; instead, whenever it encountered an “add” instruction, it went to a memory location and fetched the sum from a set of precomputed values. That was the inspiration that led Hoff and his colleagues to design and fabricate the 4004 and its successors: an architecture that was just barely realizable in silicon, with good performance provided by coupling it with detailed instructions (called microcode) stored in read-only memory (ROM) or random-access memory (RAM).
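The idea of adding by fetching precomputed sums from memory, as described above, can be illustrated with a small Python sketch. It is a simplified, hypothetical model and does not reproduce the 1620's actual memory layout or instruction set; the names `ADD_TABLE` and `lookup_add` are invented for the example, and the table is filled in with ordinary Python arithmetic only as a stand-in for values that would already sit in memory.

```python
# Hypothetical model of table-lookup addition (not the real IBM 1620 layout).
# The table maps every pair of decimal digits to (sum digit, carry).
ADD_TABLE = {
    (a, b): ((a + b) % 10, (a + b) // 10)
    for a in range(10)
    for b in range(10)
}

def lookup_add(x: str, y: str) -> str:
    """Add two non-negative decimal strings using table lookups for every digit sum."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    digits = []
    # Work from the least significant digit to the most significant.
    for dx, dy in zip(reversed(x), reversed(y)):
        s, c1 = ADD_TABLE[(int(dx), int(dy))]   # fetch the digit sum
        s, c2 = ADD_TABLE[(s, carry)]           # fetch again to fold in the carry
        carry = c1 | c2                         # at most one of the two can be 1
        digits.append(str(s))
    if carry:
        digits.append("1")
    return "".join(reversed(digits))

print(lookup_add("199", "1"))
```

The loop never computes a digit sum itself; each one is fetched from the table, which mirrors the trade described in the text: less logic in the hardware, more work done by stored values.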

(Excerpt from Computing: A Concise History, by Paul E. Ceruzzi)

Links

- A presentation on Evaluation of Microprocessor – slideshare.net
